One of my biggest Nuke Python requests for The Foundry is the ability to grab the mouse X and Y position in the viewer. It would open up a whole new world of interactivity in toolsets, gizmos and custom Python scripts. Thanks to a conversation on the Nuke mailing list and Ben Dickson’s example code, I have cooked up a little example tool that uses the cursor position to grab data and display a custom UI element.

First of all, I have not yet found an easy way to get the current position of the cursor. To get around this, I use the color sample bounding box to get the position. This does mean that you must hold down CTRL to get it. On the upside, it feels natural and means the user doesn’t trigger it accidentally.

#Get the bbox data

bboxinfo = nuke.activeViewer().node()['colour_sample_bbox'].value()

#Get the aspect of the input. Note that we sample input[0] for the width and height info!

aspect = float(self.node.input(0).width())/float(self.node.input(0).height())

#Convert relative coordinates into x and y coordinates

self.mousePosition = [(bboxinfo[0]*0.5+0.5)*self.node.input(0).width(),(((bboxinfo[1]*0.5)+(0.5/aspect))*aspect)*self.node.input(0).height()]
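The same conversion can be pulled out into a small standalone function, which makes it easier to check outside Nuke. This is a minimal sketch: the name `bbox_to_pixels` is hypothetical, and it assumes the relative x runs from -1 to 1 and the relative y from -1/aspect to 1/aspect, which is what the formula above implies.

```python
def bbox_to_pixels(bbox_x, bbox_y, width, height):
    """Map a relative viewer position to pixel coordinates.

    Assumes x runs from -1 to 1 and y from -1/aspect to 1/aspect
    (aspect = width / height), as the formula in this post implies.
    """
    aspect = float(width) / float(height)
    x = (bbox_x * 0.5 + 0.5) * width
    y = (bbox_y * 0.5 + 0.5 / aspect) * aspect * height
    return [x, y]


# Centre of a 1920x1080 input: roughly the middle pixel of the image.
print(bbox_to_pixels(0.0, 0.0, 1920, 1080))
```

Inside the tool, the first two values of `bboxinfo` and the width and height of `self.node.input(0)` would be passed in.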

Then I need to know when the user clicks the mouse. For that I hook the main QApplication instance.
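One way to hook the application is a Qt event filter. The sketch below is not the exact code from the tool: `make_click_filter` and the `on_click` callback are hypothetical names, and the Qt module is passed in as an argument so the same code works with whichever binding your Nuke version ships.

```python
def make_click_filter(QtCore, on_click):
    """Return an event filter that reports Ctrl + left-click presses.

    QtCore is the QtCore module of the Qt binding in use (PySide or
    PySide2). on_click is a hypothetical callback that receives
    (watched_object, event).
    """

    class ClickFilter(QtCore.QObject):
        def eventFilter(self, watched, event):
            if (event.type() == QtCore.QEvent.MouseButtonPress
                    and event.button() == QtCore.Qt.LeftButton
                    and event.modifiers() & QtCore.Qt.ControlModifier):
                on_click(watched, event)
            # Returning False lets the event continue on to Nuke as usual.
            return False

    return ClickFilter()


# Inside Nuke (assumption: PySide2, where QApplication lives in QtWidgets):
#   from PySide2 import QtCore, QtWidgets
#   click_filter = make_click_filter(QtCore, my_callback)  # keep a reference
#   QtWidgets.QApplication.instance().installEventFilter(click_filter)
```

Keep a reference to the returned filter object for as long as it should stay active, otherwise Python may garbage-collect it.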

To make sure that the event only fires when we are inside the actual viewer window, I grab the viewer widget using Ben Dickson’s code.
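Ben Dickson’s code is not reproduced here. As a rough sketch of the general idea, a viewer widget can be looked up by walking the Qt widget tree and matching on the window title. The function name and the assumption that viewer widgets have a title starting with "Viewer" are mine, so verify them against your Nuke version.

```python
def find_viewer_widgets(top_level_widgets, title_prefix="Viewer"):
    """Walk a Qt widget tree and collect widgets whose window title
    starts with title_prefix.

    Assumption: Nuke's viewer widgets carry a window title such as
    "Viewer1". Verify this in your Nuke version before relying on it.
    """
    found = []
    stack = list(top_level_widgets)
    while stack:
        widget = stack.pop()
        if widget.windowTitle().startswith(title_prefix):
            found.append(widget)
        # children() can include non-widget QObjects, so filter them out.
        stack.extend(c for c in widget.children() if c.isWidgetType())
    return found


# Inside Nuke: find_viewer_widgets(QApplication.instance().topLevelWidgets())
```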

What this tool does is sample the color at the cursor position. (I have created a little “dot” inside my gizmo that I call “sampler”.)

sampleR = self.node.node("sampler").sample('red',self.mousePosition[0],self.mousePosition[1])

sampleG = self.node.node("sampler").sample('green',self.mousePosition[0],self.mousePosition[1])

sampleB = self.node.node("sampler").sample('blue',self.mousePosition[0],self.mousePosition[1])

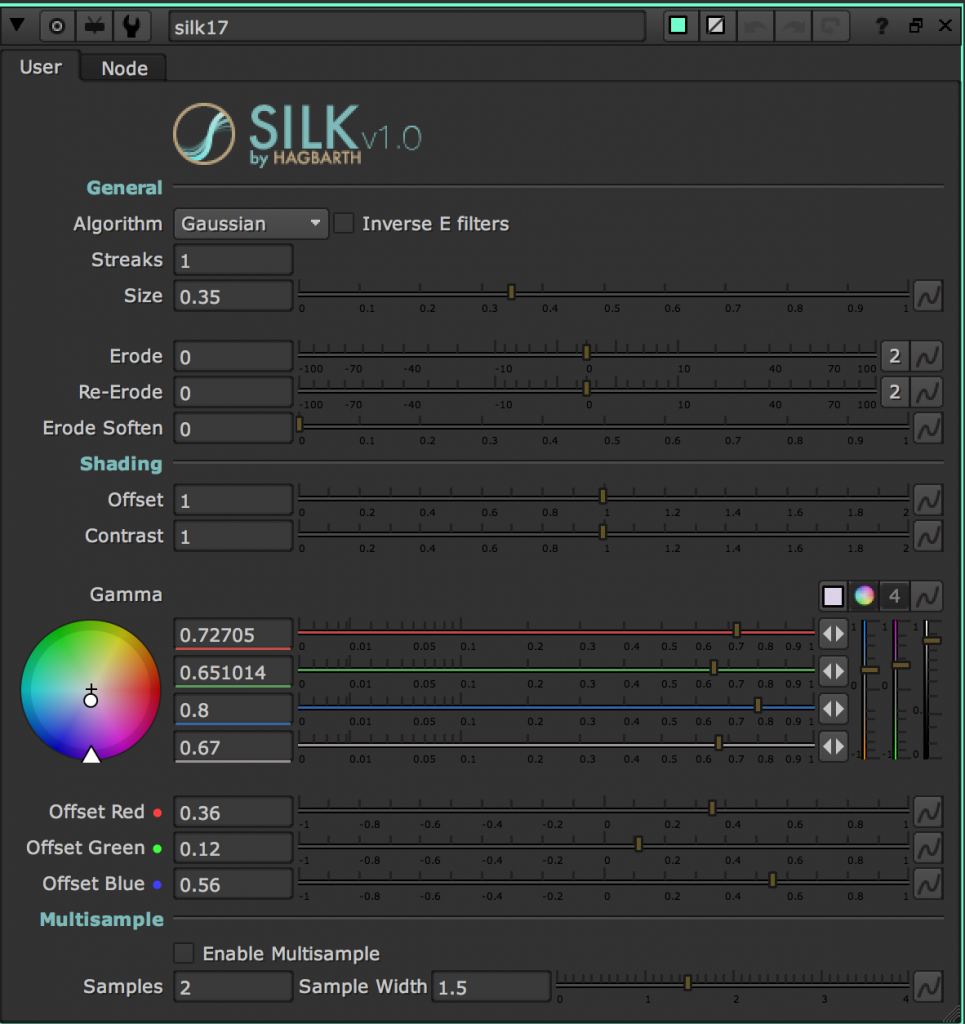

The Blinkscript that does the color manipulation uses a 2D color lattice and does basic linear interpolation between the points in the grid.

Late night blink adventures pic.twitter.com/XblqhhpHD5 (Mads Hagbarth Lund, @xads, February 23, 2017)
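The actual Blink kernel is not shown in this post. To illustrate the idea of linear interpolation on a 2D lattice, here is a minimal Python sketch of bilinear interpolation. The function name and the row/column layout are assumptions, and a real color lattice would hold one value per channel rather than a single float.

```python
def sample_lattice(lattice, u, v):
    """Bilinearly interpolate a 2D lattice of values.

    lattice is a list of rows (lattice[row][col]); u and v are positions
    in the range 0..1 across the columns and rows respectively.
    """
    rows, cols = len(lattice), len(lattice[0])
    # Scale to lattice coordinates and clamp to the valid range.
    fx = min(max(u, 0.0), 1.0) * (cols - 1)
    fy = min(max(v, 0.0), 1.0) * (rows - 1)
    x0, y0 = int(fx), int(fy)
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)
    tx, ty = fx - x0, fy - y0
    # Interpolate along x on both rows, then along y between the results.
    row0 = lattice[y0][x0] * (1 - tx) + lattice[y0][x1] * tx
    row1 = lattice[y1][x0] * (1 - tx) + lattice[y1][x1] * tx
    return row0 * (1 - ty) + row1 * ty


grid = [[0.0, 1.0],
        [2.0, 3.0]]
print(sample_lattice(grid, 0.5, 0.5))  # 1.5
```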

To get the best color separation for my tool, I use a custom colorspace called HSP. This gives me a good separation of color and has a better ratio to luminance compared to HSV and HSL.
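The conversion used in the tool is not shown here. As a rough sketch, assuming HSP refers to the hue, saturation and perceived brightness model described by Darel Rex Finley, the forward conversion could look like the following. Taking hue and saturation from `colorsys.rgb_to_hsv` is an assumption of this sketch, as is the function name.

```python
import colorsys
import math

# Channel weights from Finley's HSP description (assumed to be the model meant here).
PR, PG, PB = 0.299, 0.587, 0.114


def rgb_to_hsp(r, g, b):
    """Convert RGB (0..1 floats) to (hue, saturation, perceived brightness)."""
    # Assumption: hue and saturation are computed the same way as in HSV.
    h, s, _ = colorsys.rgb_to_hsv(r, g, b)
    # Perceived brightness is a weighted root of the squared channels.
    p = math.sqrt(PR * r * r + PG * g * g + PB * b * b)
    return h, s, p


print(rgb_to_hsp(1.0, 0.0, 0.0))
```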

So I convert the three sample values into HSP space and find the point on the color lattice that is nearest to the current sample.
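The nearest-point lookup can be sketched as a simple linear search. This is an illustration rather than the tool's code: the function name is hypothetical, it uses plain Euclidean distance, and it ignores the fact that hue wraps around.

```python
def nearest_lattice_point(points, sample):
    """Return (index, point) of the lattice point closest to sample.

    points is a sequence of coordinate tuples and sample a tuple of the
    same length. Plain squared Euclidean distance is used; hue wraparound
    is ignored, which is a simplification.
    """
    def squared_distance(point):
        return sum((a - b) ** 2 for a, b in zip(point, sample))

    index = min(range(len(points)), key=lambda i: squared_distance(points[i]))
    return index, points[index]


lattice_points = [(0.0, 0.0), (0.5, 0.0), (0.0, 0.5), (0.5, 0.5)]
print(nearest_lattice_point(lattice_points, (0.4, 0.1)))  # (1, (0.5, 0.0))
```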

Now, using the relative cursor position, I manipulate the HSP color data and convert it back into RGB space.

To create the UI element I use a GPUOp node. The node is enabled every time the user Ctrl-clicks in the viewer and disabled again once the user releases.