StickIT – A Digital Makeup Gizmo for Nuke. from Hagbarth on Vimeo.

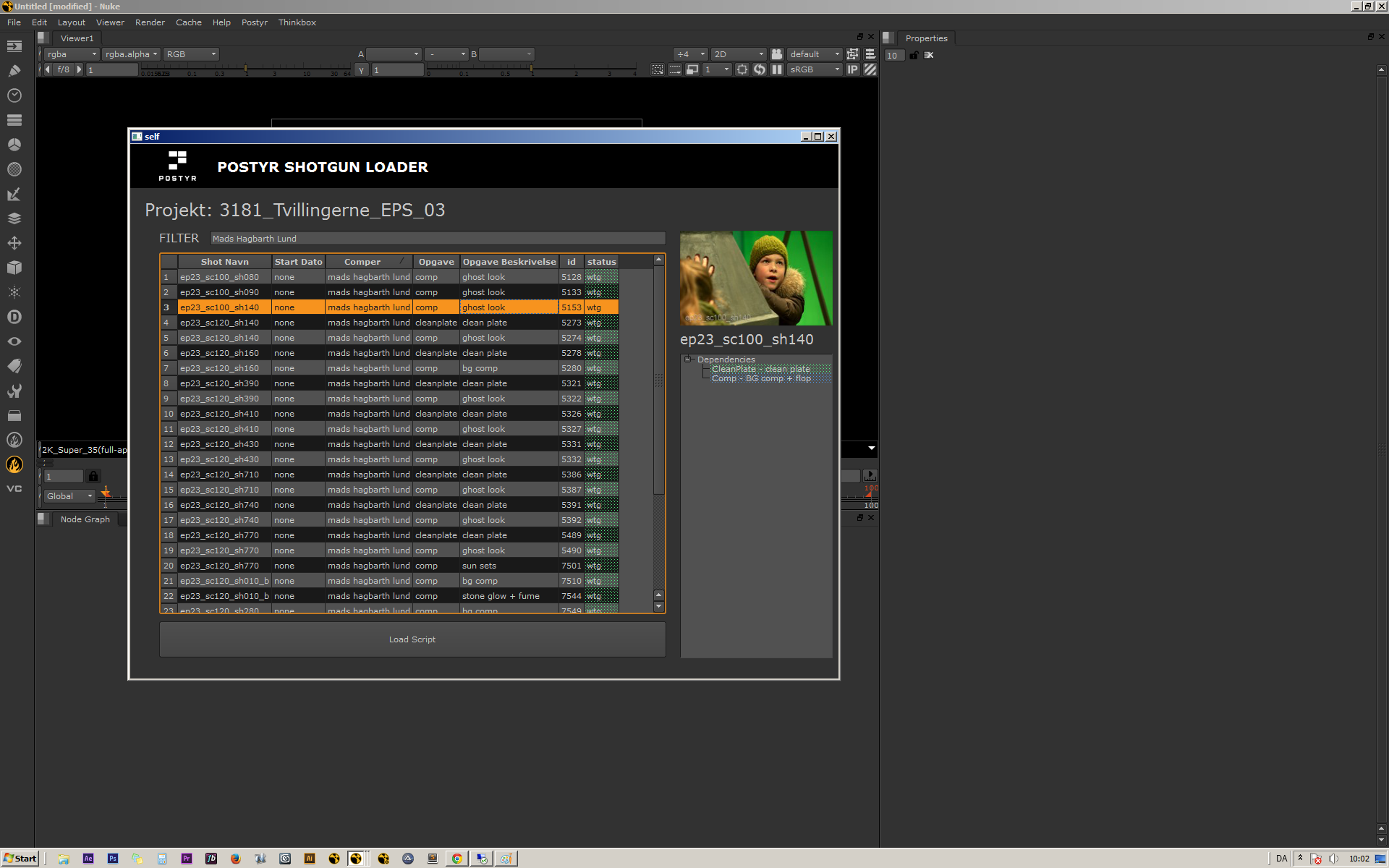

In summer 2013, I was tasked with finding a way to easily add digital makeup to actors' faces across multiple scenes (with a lot of twitchy motion and super shallow-focus closeups), quickly and with as little effort as possible. That was when I came up with StickIT, a 2D optical flow(ish) solution for “warp” matchmoving one image onto another.

Most digital makeup solutions involve a 2D planar track or a 3D matchmove, each with its own strengths and weaknesses. When doing face makeup on an actor who is talking or making other rapid motions, both 3D and planar track solutions can easily take hours before a solid track is in place, and this is where a combination of the two comes in.

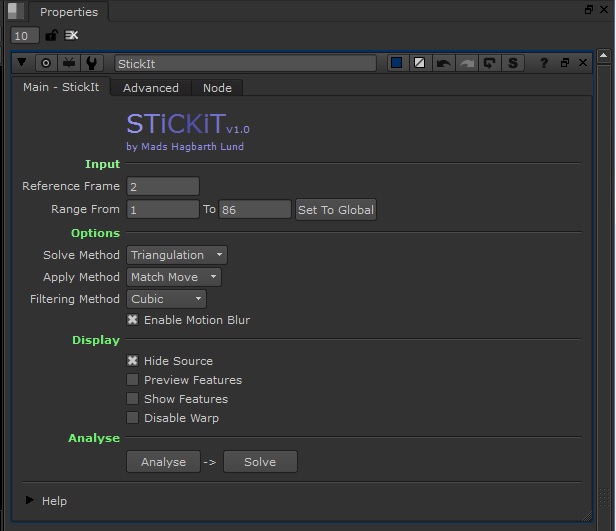

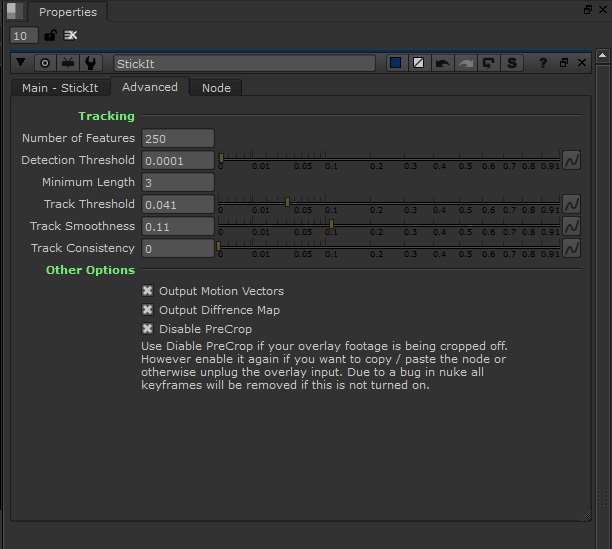

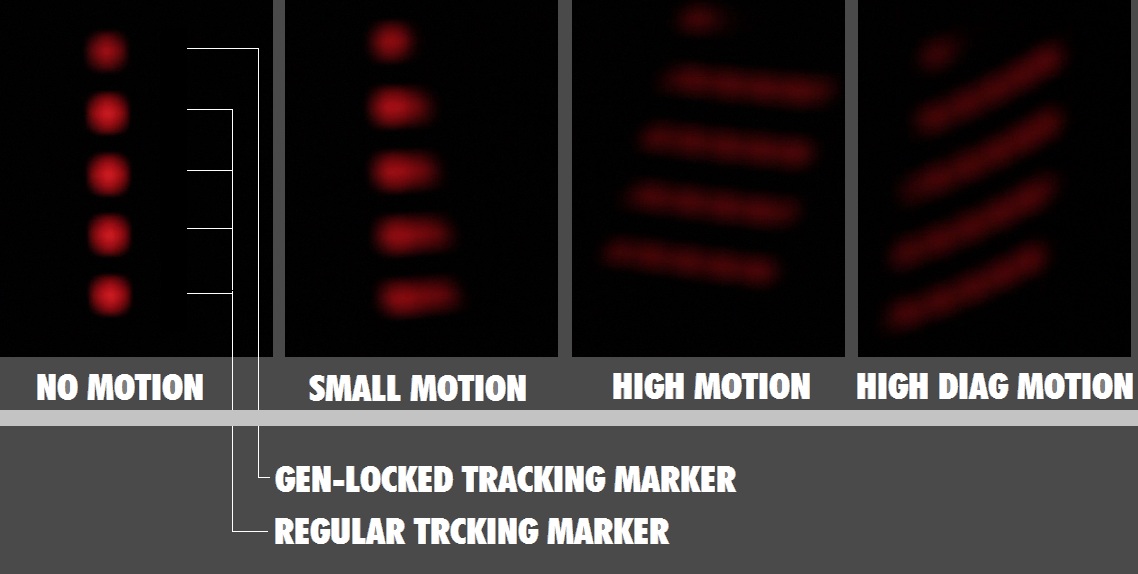

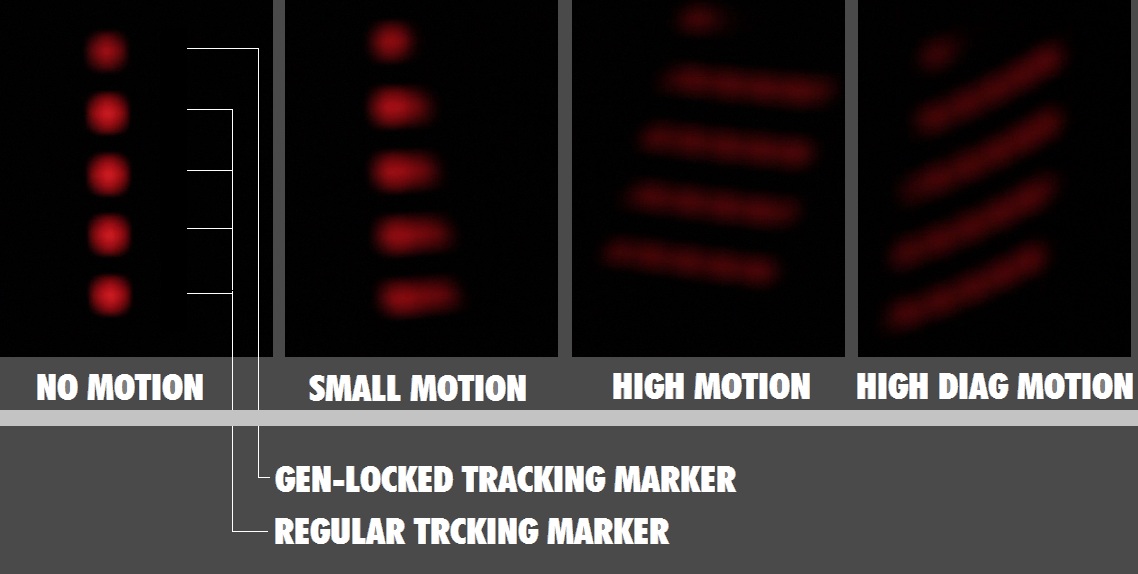

StickIT uses the Nuke Camera Tracker to generate a 2D pointcloud on the desired area. StickIT then generates a mesh of pins based on the density of points in the pointcloud. By triangulating the nearest points, taking both movement and distance into account, StickIT calculates the most suitable motion for each pin. It all becomes one big mesh that doesn’t care about edges, regions, planes or perspective, but rather just the “optical flow” of the pixels underneath. This obviously has its disadvantages in certain situations, but makes it a simple one-click, set-and-forget approach.
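To make the "movement and distance" idea a bit more concrete, here is a minimal sketch of how a single pin could borrow its motion from the nearest tracked points. This is not StickIT's actual source: the function name `pin_motion`, the parameters `k` and `eps`, and the specific weighting (inverse distance, multiplied by a penalty for points that move differently from their neighbours) are all assumptions made for illustration.

```python
import math


def pin_motion(pin, tracks, k=3, eps=1e-6):
    """Estimate the motion of one pin from nearby tracked points.

    pin    -- (x, y) position of the pin
    tracks -- list of ((x, y), (dx, dy)): point position and its motion
    k      -- how many nearest points to blend (3 gives a triangle)
    eps    -- small constant that avoids division by zero (assumed value)
    """
    if not tracks:
        raise ValueError("need at least one tracked point")

    # Pick the k tracked points closest to the pin.
    nearest = sorted(tracks, key=lambda t: math.dist(pin, t[0]))[:k]

    # Average motion of those neighbours, used to spot outliers.
    mean_dx = sum(m[0] for _, m in nearest) / len(nearest)
    mean_dy = sum(m[1] for _, m in nearest) / len(nearest)

    total_w = 0.0
    out_dx = out_dy = 0.0
    for pos, (dx, dy) in nearest:
        # Closer points count more ...
        dist_w = 1.0 / (math.dist(pin, pos) + eps)
        # ... and points that disagree with their neighbours count less.
        motion_w = 1.0 / (1.0 + math.hypot(dx - mean_dx, dy - mean_dy))
        w = dist_w * motion_w
        out_dx += w * dx
        out_dy += w * dy
        total_w += w

    return (out_dx / total_w, out_dy / total_w)
```

Calling `pin_motion((3, 3), tracks)` once per pin and per frame would give each pin its own offset. A pin sitting right on top of a tracked point follows that point almost exactly, while a pin between points gets a blend of them.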

By pulling an ST map through the warp you can generate a difference map, and from that an ST map and a motion vector map. These can be used not only to create motion blur and to re-apply the effect multiple times in the same comp, but also to export the two maps and replicate the exact same results inside Fusion or After Effects, for example.
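The relationship between an ST map and a motion vector map can be sketched in a few lines of plain Python. This is an illustration only, not code from the gizmo: the helper names `identity_st` and `st_to_vectors` are made up, images are represented as nested lists of `(u, v)` tuples, and both the pixel-centre convention `(x + 0.5) / width` and the sign of the resulting vectors are assumptions.

```python
def identity_st(width, height):
    """Build an un-warped ST map: every pixel stores its own
    normalised (u, v) coordinate, sampled at the pixel centre."""
    return [
        [((x + 0.5) / width, (y + 0.5) / height) for x in range(width)]
        for y in range(height)
    ]


def st_to_vectors(warped_st, width, height):
    """Turn a warped ST map into per-pixel motion vectors (in pixels)
    by subtracting the identity ST map and scaling by the image size."""
    ident = identity_st(width, height)
    vectors = []
    for y in range(height):
        row = []
        for x in range(width):
            wu, wv = warped_st[y][x]
            iu, iv = ident[y][x]
            # The difference map, converted from 0-1 range to pixels.
            row.append(((wu - iu) * width, (wv - iv) * height))
        vectors.append(row)
    return vectors
```

For example, an ST map in which every pixel points two pixels to its right produces a vector of roughly `(2, 0)` everywhere. Because both maps are ordinary images, they can be written out and used by any package that has an ST map or vector blur node.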

With all that being said, StickIT is made to do things fast and dirty and won’t replace any of the other solutions if there is time for a proper matchmove. But when you are on a budget and have 100 more of these shots waiting in the queue, you might as well just “do the clicks and see if it sticks”.

The Python source code took quite a few rounds of cleanup (the yellow parts) to bring the solve time down to a few seconds.