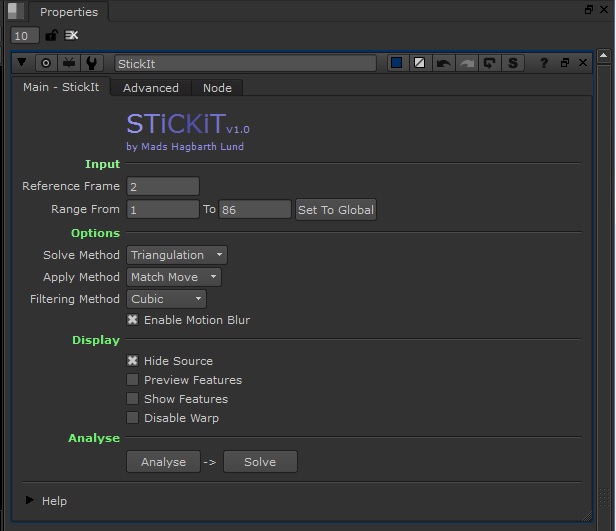

It is time for the first alpha release of StickIt.

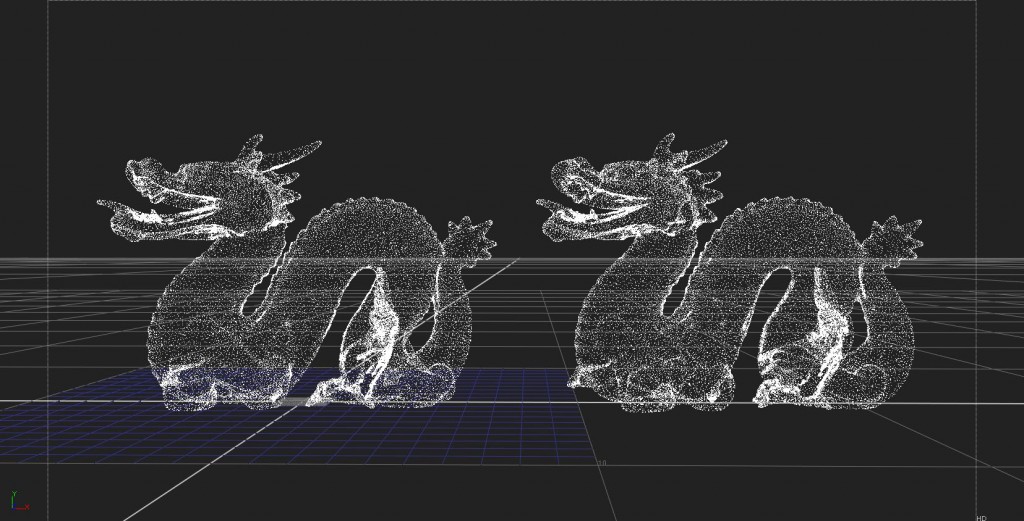

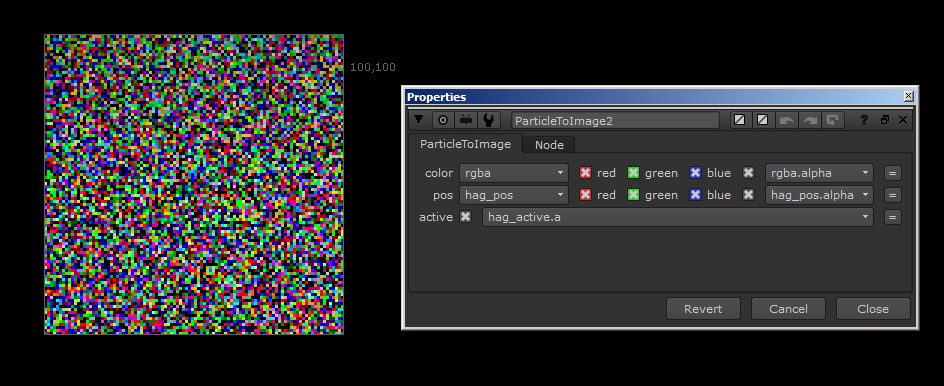

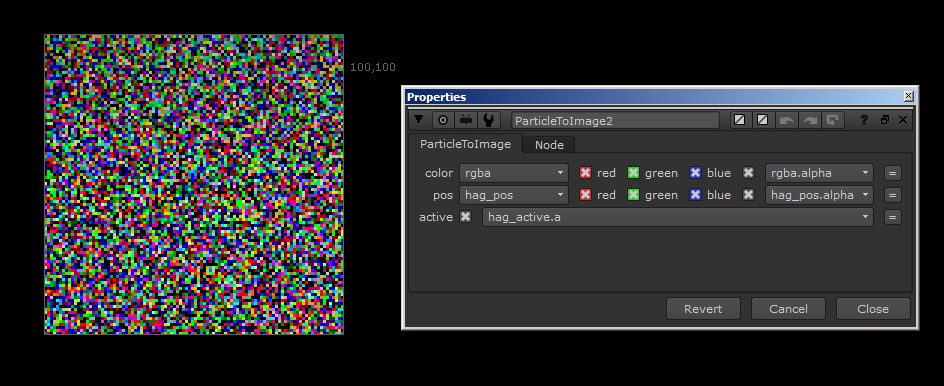

StickIt is a toolset for Nuke that helps you stick things onto non-rigid surfaces.

I created the first concept for the tool during R&D for the Danish TV series Heartless, but due to script changes it was never used. I later fully reworked the tool for a planned public release, but because other personal projects caught my interest, I have not touched it for the last six months.

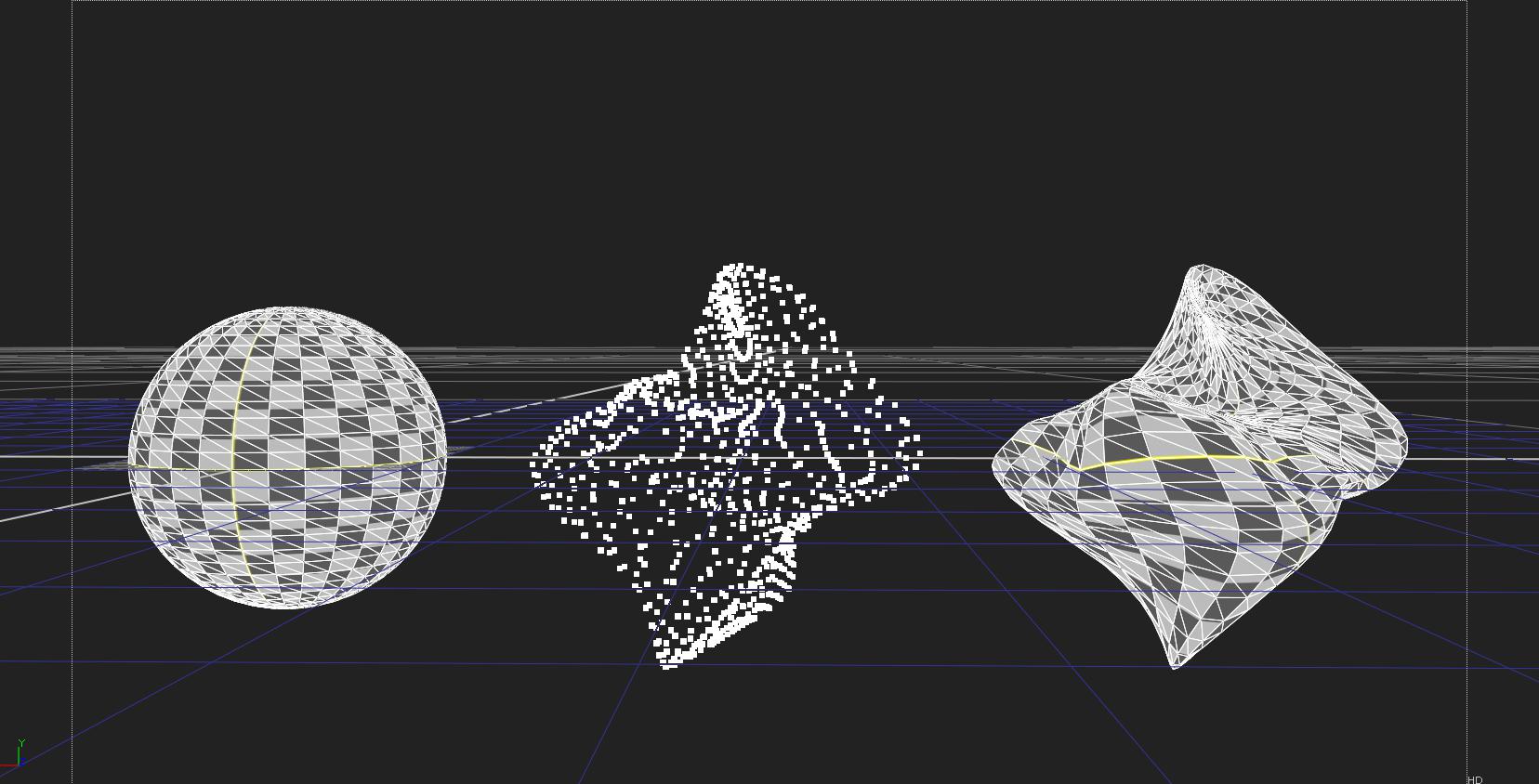

Currently, two very important features are missing. The first is the ability to assist-animate the points, so that if they drift off track you can manually nudge them back into place. The second is the point setup itself. Right now the tool uses the camera tracker points from the first tracked frame to warp the image. Not only does this cause a lot of redundancy, since some points are clustered together, but it can also give inferior results compared to letting the operator place the points before the solve.

As a result, long sequences usually end up with a poor track toward the end, as points gradually drift away from their original track paths.

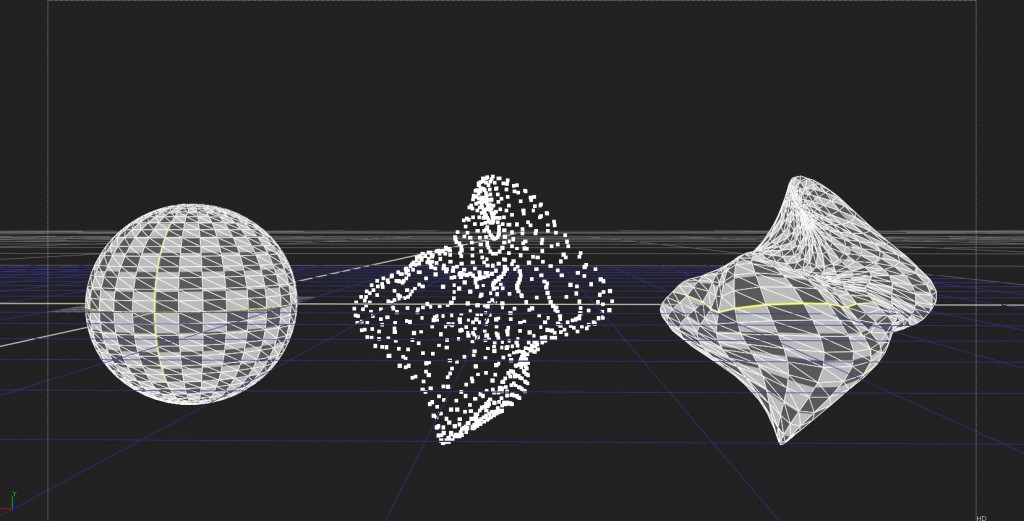

To fix this, I plan to add an option to place guide points manually, which should make the solve not only much faster but also more accurate and easier to control. On top of that, I want to add an axis-like system to the guide points so you can alter their positions with relative animation.

This also means you will be able to do single-point tracks on an area that has nothing to track.

Please watch this video before use:

To keep track of versions and feedback, I have decided to release it only on Nukepedia.

http://www.nukepedia.com/toolsets/transform/stickit-alpha