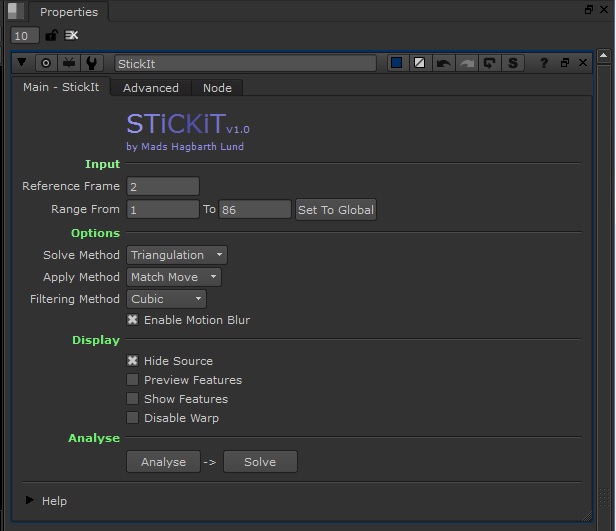

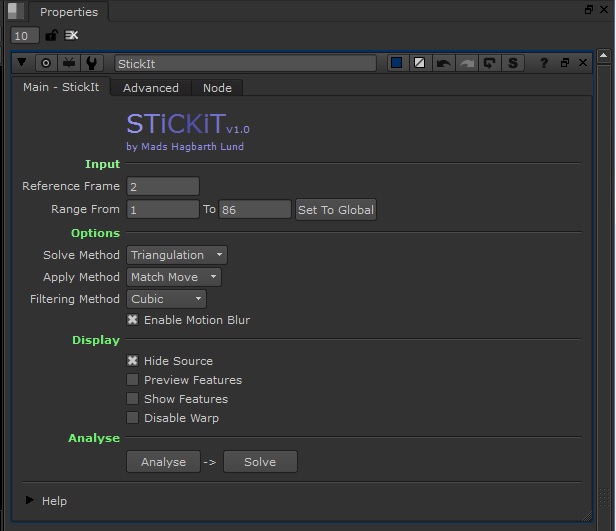

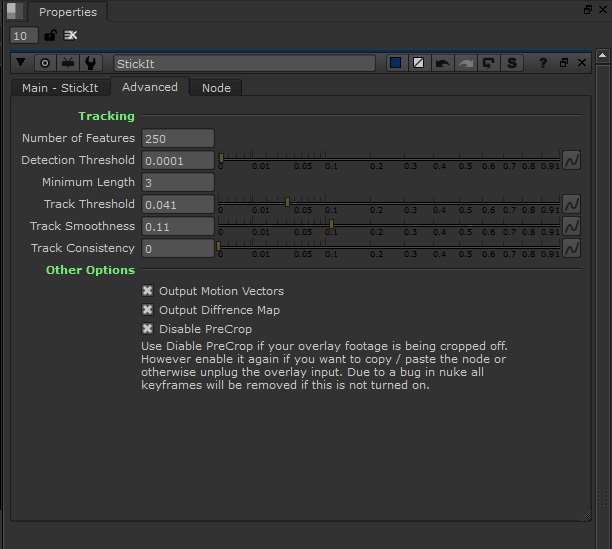

Update: Nuke 8 fixed or added some of this functionality.

The 3D CameraTracker node in Nuke is quite nice, but it does have its limitations. One of the cool things is that you can export individual tracking features as "Usertracks" and use those as 2D screen-space tracks. However, you can only select tracks from one frame at a time, and you can only export a maximum of 100 tracks in a single node. You cannot track single features manually, and you cannot do object solving.

Well... unless you use Python =)

Extracting All FeatureTracks

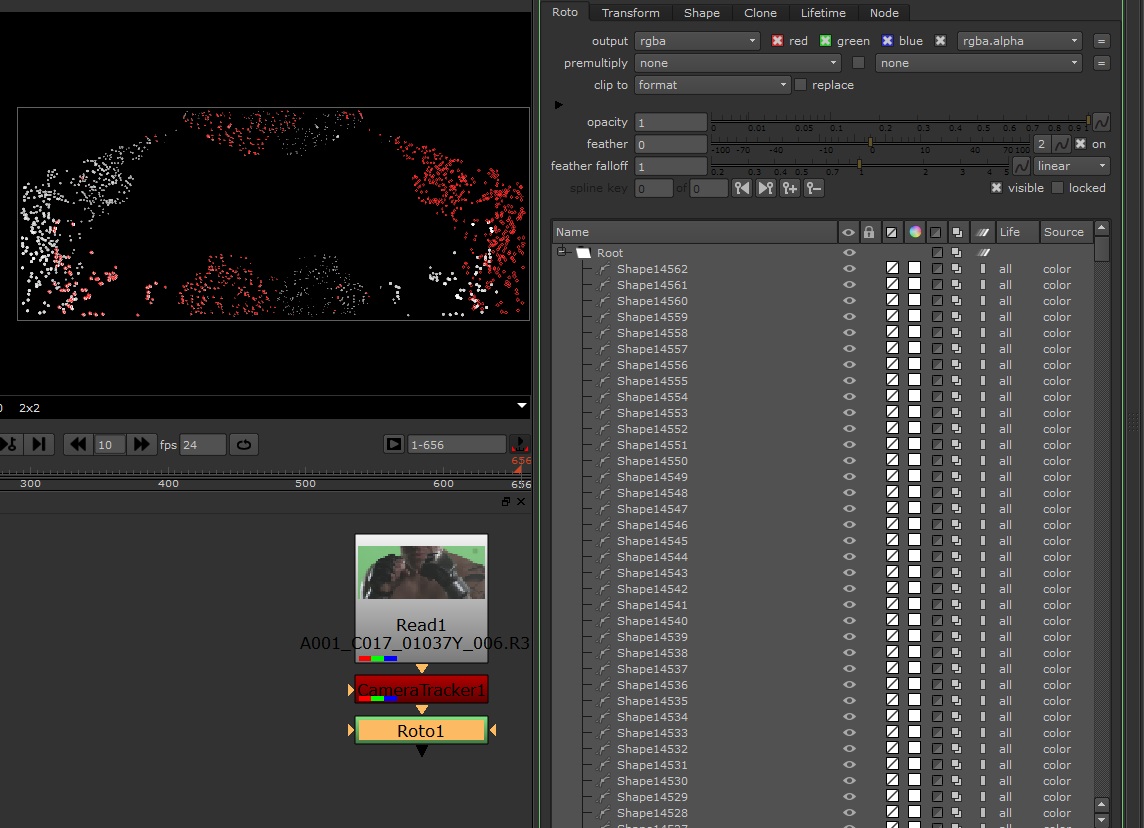

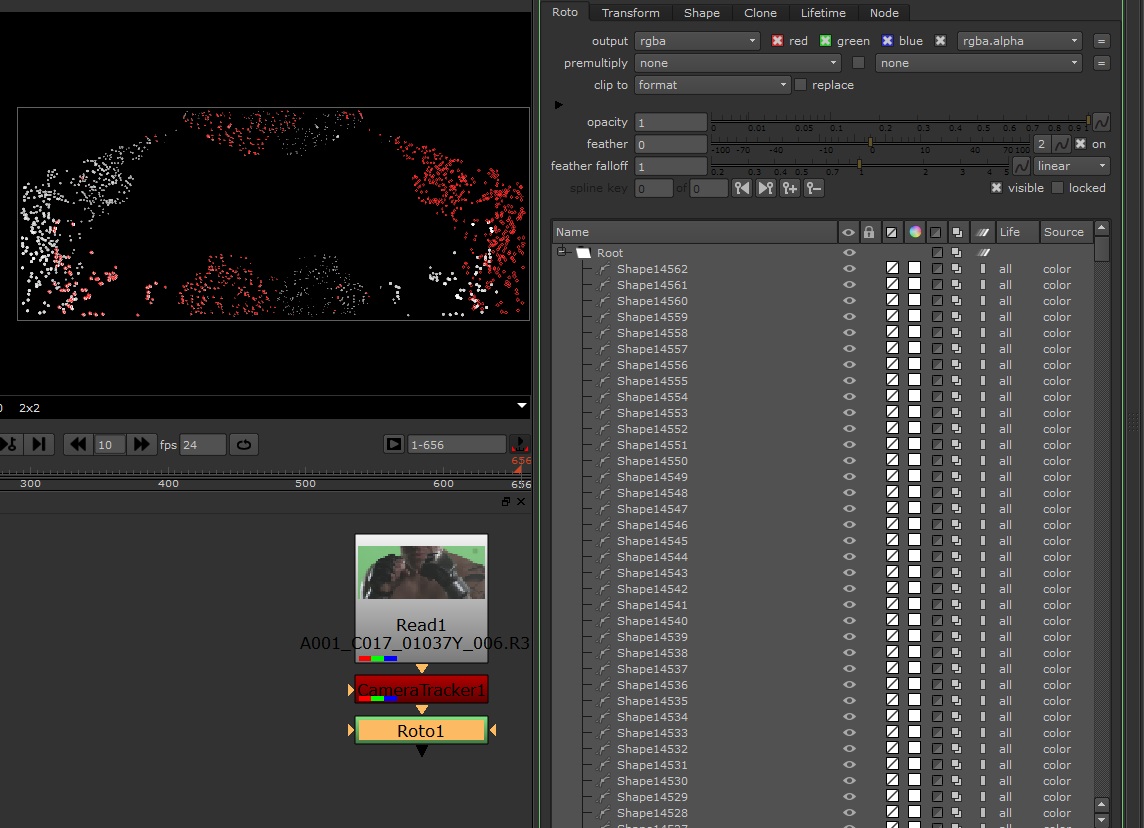

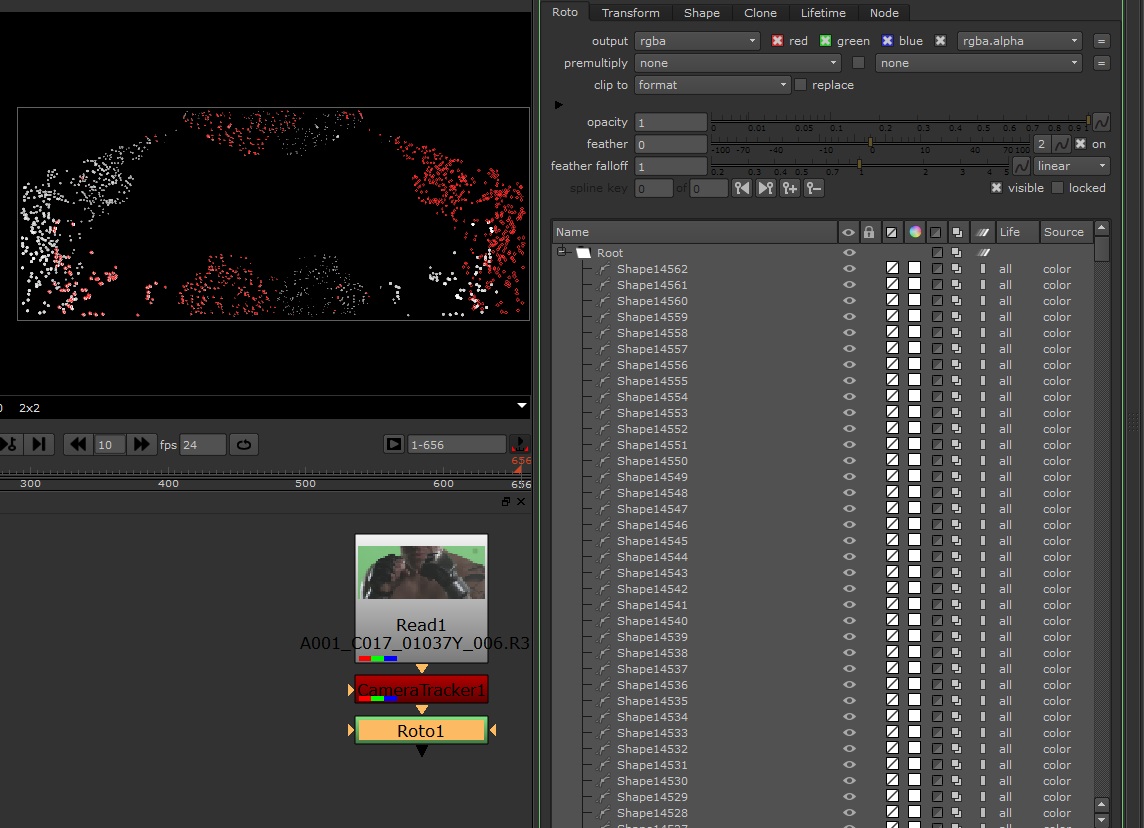

I have created a script that returns a full list of tracking points from a CameraTracker node. This can, for example, be fed into a RotoPaint node to do something like this:

Nuke CameraTracker to Rotoshapes from Hagbarth on Vimeo.

Here is some sample code that exports all FeatureTracks from a CameraTracker node. It parses the node's internal `serializeKnob` text, so it depends on the undocumented layout of that data:

```python
import nuke  # already available inside Nuke's Script Editor


def ExportCameraTrack(myNode):
    """Extract all 2D tracking features from a 3D CameraTracker node (not usertracks).

    Parameter(s): myNode - A CameraTracker node containing tracking features.
    Return: Output - A list of points formatted [ [[Frame,X,Y][...]] [[...][...]] ],
            one inner list per feature (X and Y are returned as strings).
    Note(s): N/A
    """
    myKnob = myNode.knob("serializeKnob")
    myLines = myKnob.toScript()
    DataItems = myLines.split('\n')  # str.split works in both Python 2 and 3
    Output = []
    LastFrame = None  # set by the frame lines handled in the elif branch below

    for index, line in enumerate(DataItems):
        tempSplit = line.split(' ')

        if len(tempSplit) > 4 and tempSplit[-1] == "10":  # Header
            # The first object always has 2 unknown ints; fix it the easy way by offsetting by 2.
            if len(tempSplit) > 6 and tempSplit[6] == "10":
                offsetKey = 2
                offsetItem = 0
            else:
                offsetKey = 0
                offsetItem = 0

            # For some weird reason the header is located at the first index after the
            # first item, so we go one step down and look for the header data.
            itemHeadersplit = DataItems[index + 1].split(' ')
            itemHeader_UniqueID = itemHeadersplit[1]

            # This one is rather weird, but after a certain amount of items the structure changes again.
            if len(itemHeadersplit) == 3:
                itemHeadersplit = DataItems[index + 2].split(' ')
                offsetKey = 2
                offsetItem = 2

            itemHeader_FirstItem = itemHeadersplit[3 + offsetItem]
            itemHeader_NumberOfKeys = itemHeadersplit[4 + offsetKey]

            if LastFrame is None:
                raise ValueError("No frame line found before the first feature header")

            # Here we extract the individual XY coordinates
            PositionList = []
            for x in range(2, int(itemHeader_NumberOfKeys) + 1):
                fields = DataItems[index + x].split(' ')
                PositionList.append([int(LastFrame) + (x - 2), fields[2], fields[3]])
            Output.append(PositionList)

        elif len(tempSplit) > 8 and tempSplit[1] == "0" and tempSplit[2] == "1":
            # Frame number used as the starting frame for the following feature(s)
            LastFrame = tempSplit[3]

    return Output


# Example 01:
# This code will extract all tracks from the camera tracker and print the first item.
Testnode = nuke.toNode("CameraTracker1")  # change this to your tracker node!
tracks = ExportCameraTrack(Testnode)
for item in tracks[0]:
    print(item)
```
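The list returned by `ExportCameraTrack` holds one inner list per feature, each entry being `[frame, x, y]`, with X and Y still as strings taken straight from the serialized knob. Before driving shapes or transforms with it, it can help to convert the values to numbers and regroup them by frame. This is a minimal sketch, assuming the `[[frame, x, y], ...]` layout described in the function's header; `tracks_by_frame` is a hypothetical helper, not part of Nuke, and the sample data is made up.

```python
def tracks_by_frame(tracks):
    """Regroup ExportCameraTrack() output by frame.

    Assumes each track is a list of [frame, x, y] entries where x and y
    may be strings. Returns {frame: [(track_index, x, y), ...]}.
    """
    frames = {}
    for track_index, track in enumerate(tracks):
        for frame, x, y in track:
            # Convert to numbers and file the point under its frame
            frames.setdefault(int(frame), []).append(
                (track_index, float(x), float(y)))
    return frames


# Usage with made-up sample data in the same layout:
sample = [
    [[1, "10.5", "20.0"], [2, "11.0", "21.5"]],
    [[2, "300.0", "150.25"]],
]
by_frame = tracks_by_frame(sample)
print(sorted(by_frame))  # [1, 2]
print(by_frame[2])       # [(0, 11.0, 21.5), (1, 300.0, 150.25)]
```

Grouping by frame makes it easy to build one set of points per frame, which is the shape of data you would want when animating roto points from many features at once.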

Remember: when dealing with 1000+ features, bake the keyframes instead of using expressions, because expressions will slow down the Nuke script immensely.
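Baking means writing one keyframe per frame onto a knob instead of linking it with an expression. Here is a minimal sketch, assuming a 2D (XY) knob such as a Transform node's `translate` as the target. It uses the Nuke knob methods `setAnimated()` and `setValueAt(value, time, index)`. `bake_track` is a hypothetical helper, and the choice of target knob is only an example.

```python
def bake_track(knob, track):
    """Write one keyframe per [frame, x, y] entry onto a 2D knob.

    knob:  an XY-style Nuke knob, e.g. nuke.toNode("Transform1")["translate"]
           (assumed target; any Array_Knob with two channels is keyed the same way).
    track: one entry from ExportCameraTrack(), i.e. [[frame, x, y], ...].
    """
    knob.setAnimated()  # make the knob's channels animatable before keying
    for frame, x, y in track:
        knob.setValueAt(float(x), int(frame), 0)  # channel 0 = X
        knob.setValueAt(float(y), int(frame), 1)  # channel 1 = Y


# Inside Nuke, for example:
# import nuke
# tracks = ExportCameraTrack(nuke.toNode("CameraTracker1"))
# bake_track(nuke.toNode("Transform1")["translate"], tracks[0])
```

Plain keyframes are static values, so Nuke does not have to re-evaluate an expression for every feature on every frame.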

Manual Single Feature Track

I did some additional tests with this, for example writing the reverse of this script, which lets me add 2D tracks from a Tracker node to the 3D CameraTracker node.

Object Solver

This is not related to the 2D tracking points, but it is still a simple feature that should have been included in the tracker.