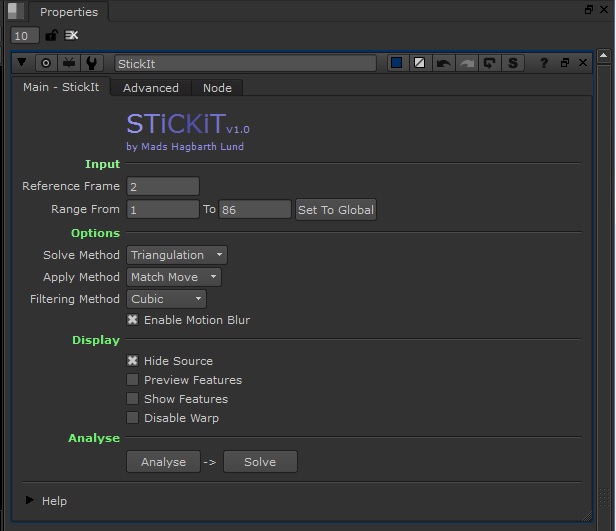

I have been working on an Auto-Projection mapper for Nuke.

If you have a 3D-tracked scene and build up some geometry with the ModelBuilder, you need to pick the frame or frames to project from to get the best possible texture. This usually involves some guesswork and a few manual fixes, so I wanted to see whether I could calculate the optimal frames to project from.

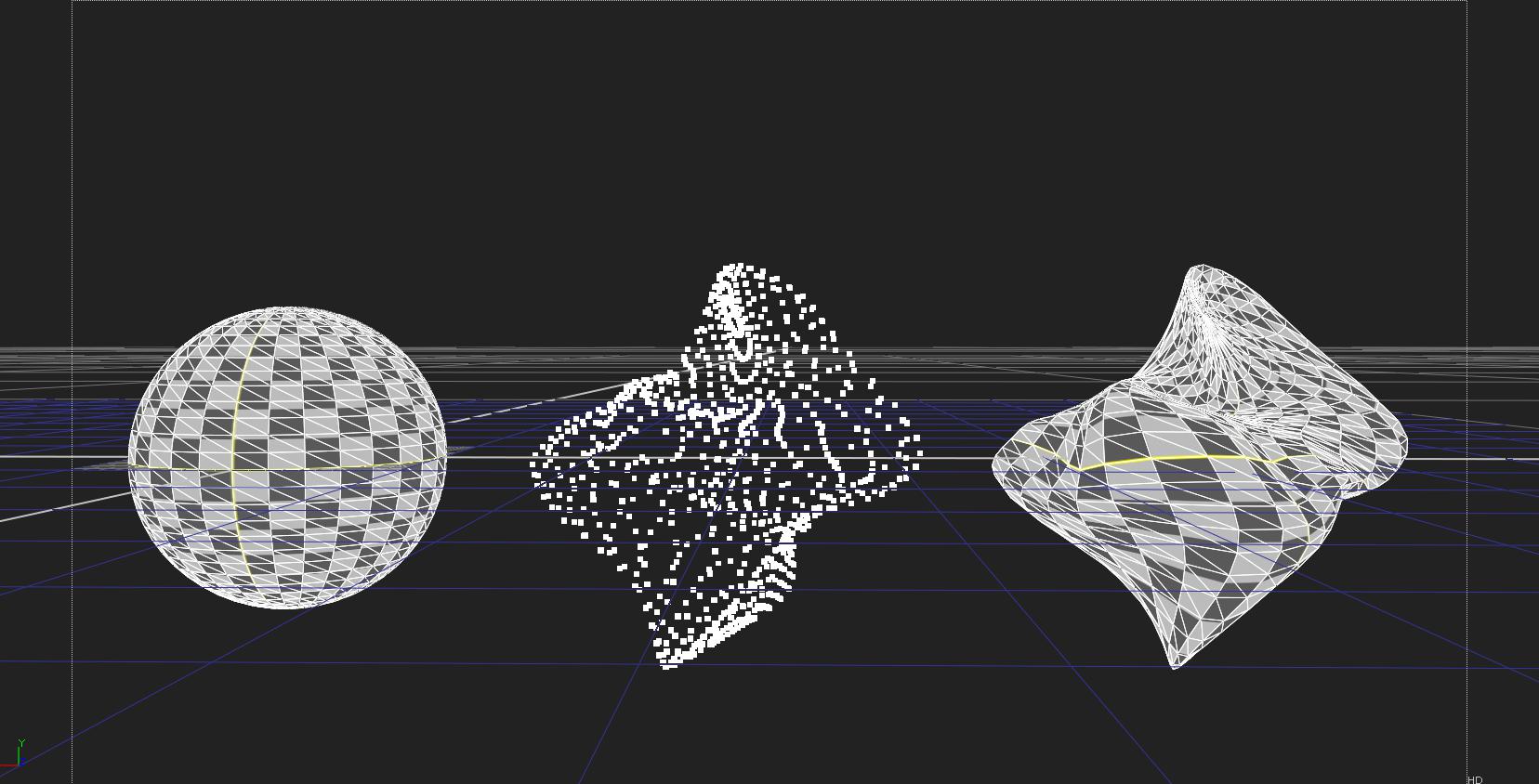

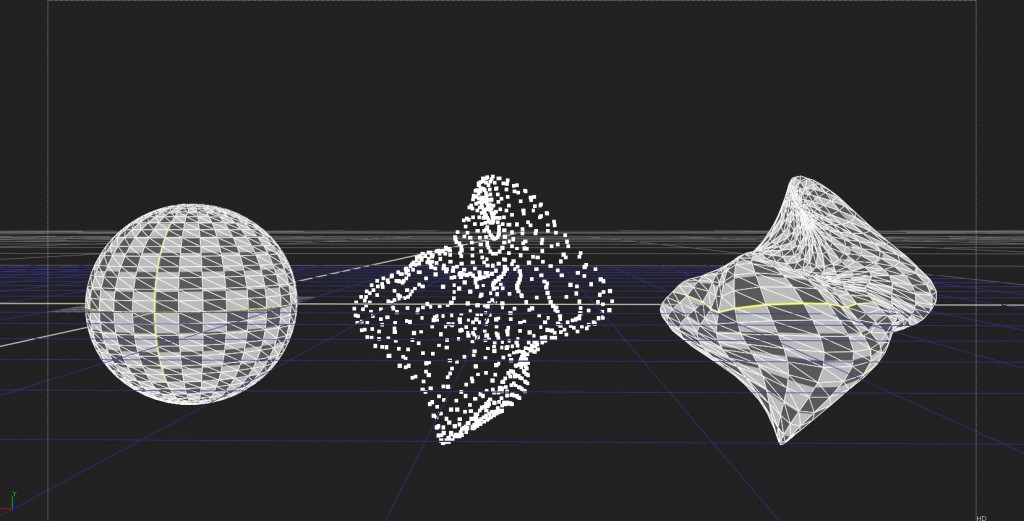

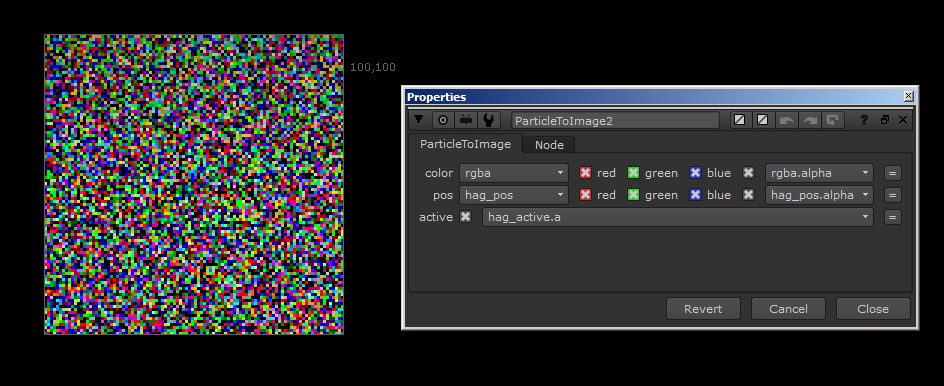

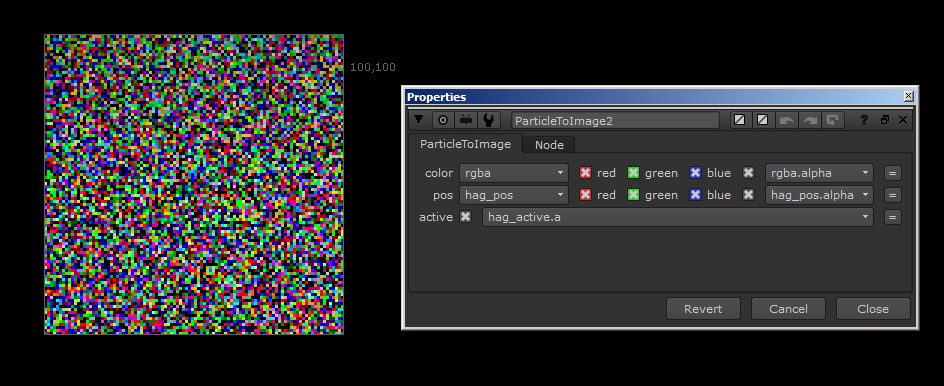

So I have created a toolset that calculates the optimal frame to project from for every pixel of the UV map, and also bakes that optimal texture down for use.
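
As a rough illustration of the per-pixel selection and bake, here is a minimal sketch in plain Python. It assumes every frame has already been projected into UV space as a colour map plus a matching Quality Index map, flattened into lists. The function name `bake_best_frames` and the data layout are hypothetical and are not part of the toolset or of Nuke's API.

```python
from typing import List, Sequence, Tuple

Color = Tuple[float, float, float]


def bake_best_frames(
    frames: Sequence[int],
    colors: Sequence[Sequence[Color]],
    quality: Sequence[Sequence[float]],
) -> Tuple[List[Color], List[int]]:
    """For every UV pixel, keep the colour from the frame with the highest quality.

    colors[f][p] and quality[f][p] hold the projected colour and the
    Quality Index (0-1) of UV pixel p as seen from frames[f].
    Returns the baked texture and the winning frame number per pixel
    (-1 where no frame sees the pixel, i.e. every quality is 0).
    """
    n_pixels = len(quality[0])
    baked: List[Color] = [(0.0, 0.0, 0.0)] * n_pixels
    best_frame: List[int] = [-1] * n_pixels
    best_q: List[float] = [0.0] * n_pixels
    for f, frame in enumerate(frames):
        for p in range(n_pixels):
            q = quality[f][p]
            # Strictly greater: ties keep the earlier frame, and q == 0 never wins.
            if q > best_q[p]:
                best_q[p] = q
                best_frame[p] = frame
                baked[p] = colors[f][p]
    return baked, best_frame


# Usage: two frames, three UV pixels (the last pixel is seen by neither frame).
frames = [1001, 1010]
red, green = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
colors = [[red, red, red], [green, green, green]]
quality = [[0.9, 0.2, 0.0], [0.4, 0.7, 0.0]]
baked, best = bake_best_frames(frames, colors, quality)
print(best)   # [1001, 1010, -1]
print(baked)  # [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 0.0)]
```

The per-frame winner list doubles as the "which frame did this pixel come from" map, which is what makes the result easy to inspect and fix by hand.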

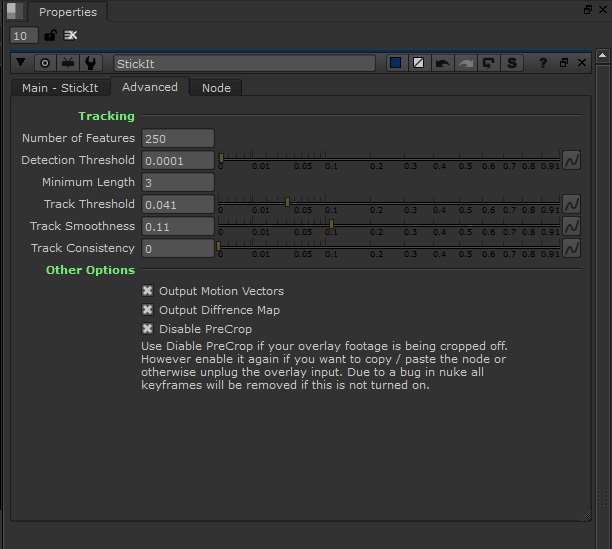

Every pixel on every frame is given a “Quality Index” ranging from 0 to 1, based on the incidence (using `sqrt(1.0 - pow(incidence, 2))` to get the right falloff), distance, field of view and aperture, and the best pixels are combined into the final projection map. If the shutter speed is known, motion blur could be added to the equation, leaving only focal length as an unknown.
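
The post does not spell out how the individual terms are combined, so the following is only a hypothetical sketch of one way to build such a score. It assumes that `incidence` is 0 when the camera faces the surface head-on and 1 at a grazing angle, that field of view comes from focal length and horizontal aperture via the pinhole formula, and that distance and field of view are folded into a single "resolution" term by comparing the surface width covered by one camera pixel against an assumed target texel size. Multiplying the two terms together is also an assumption.

```python
import math


def horizontal_fov(focal_mm: float, haperture_mm: float) -> float:
    """Horizontal field of view in degrees for a pinhole camera."""
    return math.degrees(2.0 * math.atan(haperture_mm / (2.0 * focal_mm)))


def quality_index(
    incidence: float,
    distance: float,
    fov_deg: float,
    image_width_px: int,
    target_texel_size: float,
) -> float:
    """Hypothetical per-pixel Quality Index in [0, 1].

    incidence: assumed 0 when facing the surface head-on, 1 at a grazing angle.
    distance: camera-to-surface distance in scene units.
    fov_deg: horizontal field of view (see horizontal_fov).
    target_texel_size: surface width one UV texel covers (assumed constant).
    """
    # Incidence falloff from the post: sqrt(1.0 - pow(incidence, 2)).
    inc = min(max(incidence, 0.0), 1.0)
    angle_term = math.sqrt(1.0 - inc * inc)

    # Assumed resolution term: surface width seen by a single camera pixel.
    footprint = 2.0 * distance * math.tan(math.radians(fov_deg) / 2.0) / image_width_px
    # 1.0 while a camera pixel is at least as fine as a texel, then falls off.
    resolution_term = min(1.0, target_texel_size / footprint) if footprint > 0 else 0.0

    # Assumed combination: both terms must be good for a high score.
    return angle_term * resolution_term


fov = horizontal_fov(18.0, 36.0)                 # about 90 degrees
print(quality_index(0.0, 1.0, fov, 2000, 0.002))  # close, head-on: about 1.0
print(quality_index(0.6, 1.0, fov, 2000, 0.002))  # oblique: about 0.8
print(quality_index(0.0, 4.0, fov, 2000, 0.002))  # four times as far: about 0.5
```

A shutter-speed-based motion blur term, as suggested above, could be multiplied in the same way, but the sketch leaves it out.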

Here is a little example video. Sadly, my 60D has a lot of rolling shutter, so it's hard to get a steady solve, but I think the video shows the idea quite well.

Nuke Auto-Projection Mapper from Hagbarth on Vimeo.

While this does have its drawbacks (focus, and unmapped intersecting objects), it will always give a very good base texture to work from, plus a list of frames for every pixel that gives you an overview of which frames fall within the best range.
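
One way to produce such a per-pixel frame list is to keep every frame whose score lands within a small tolerance of that pixel's best score. This is a minimal sketch under the same assumed data layout as above (per-frame lists of Quality Index values); the name `frames_within_range` and the default tolerance of 0.05 are hypothetical choices, not values from the toolset.

```python
from typing import List, Sequence


def frames_within_range(
    frames: Sequence[int],
    quality: Sequence[Sequence[float]],
    tolerance: float = 0.05,
) -> List[List[int]]:
    """For every UV pixel, list the frames whose Quality Index is within
    `tolerance` of that pixel's best score. Frames that do not see the
    pixel (quality 0) are never listed, so unseen pixels get []."""
    n_pixels = len(quality[0])
    result: List[List[int]] = []
    for p in range(n_pixels):
        best = max(quality[f][p] for f in range(len(frames)))
        result.append([
            frame
            for f, frame in enumerate(frames)
            if quality[f][p] > 0.0 and quality[f][p] >= best - tolerance
        ])
    return result


# Pixel 0 is nearly as good from frames 1 and 2; pixel 1 is seen by no frame.
print(frames_within_range([1, 2, 3], [[0.9, 0.0], [0.88, 0.0], [0.5, 0.0]]))
# [[1, 2], []]
```

A wider tolerance gives more candidate frames per pixel, which is handy when the single best frame has an occluder or a focus problem in that area.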

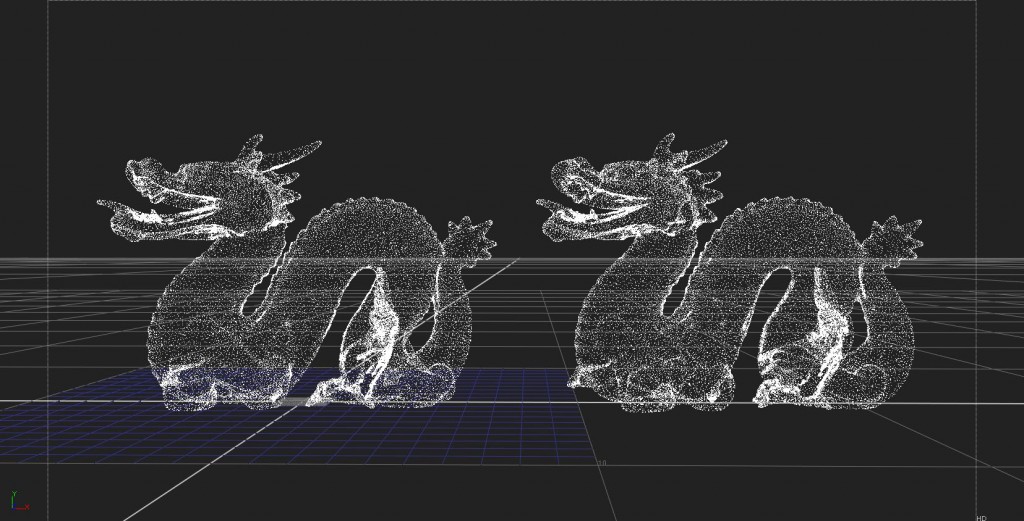

Another thing this could be useful for is texturing non-color lidar scans. You could give it a series of frames, or video from a stills camera, and it would create a texture based on the optimal views.