Blur + Unpremult

There are many ways to fill in missing or unwanted sections of an image with content that matches the surrounding pixels.

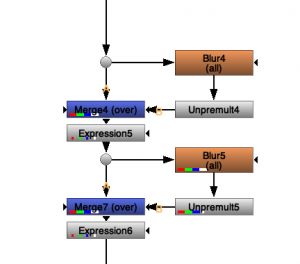

One of the most common methods among Nuke artists is to first blur the image, then unpremultiply it (turning the soft alpha area solid), and then add it underneath the original image using the mask as a holdout.

Sadly, this method often produces streak artifacts (shown in the image above), and attempts to reduce the streaks often add a great deal of computation time.

The other downside is that you often need to change the parameters depending on the size of the mask, so if the area changes in size or you have multiple holes of different sizes, you will need to adjust the parameters or split the work up into multiple sections.
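To make the blur + unpremult idea concrete, here is a minimal sketch in plain Python. It is not how Nuke implements its nodes: it assumes a single-channel image stored as a list of lists, a simple box blur standing in for the Blur node, and an alpha that is 1 where the pixel is known and 0 inside the hole. The names `box_blur` and `blur_unpremult_fill` are made up for this illustration.

```python
def box_blur(img, radius):
    """Box blur a 2D list of floats; pixels outside the image count as 0."""
    h, w = len(img), len(img[0])
    taps = (2 * radius + 1) ** 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
            out[y][x] = total / taps
    return out


def blur_unpremult_fill(color, alpha, radius):
    """Fill the hole (alpha == 0) of a single-channel image.

    Assumption: color and alpha are 2D lists of the same size.
    """
    h, w = len(color), len(color[0])
    # Premultiply so the hole contributes nothing to the blur.
    premult = [[color[y][x] * alpha[y][x] for x in range(w)] for y in range(h)]
    blur_c = box_blur(premult, radius)
    blur_a = box_blur(alpha, radius)
    result = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Unpremultiply: dividing by the blurred alpha turns the soft area solid.
            fill = blur_c[y][x] / blur_a[y][x] if blur_a[y][x] > 0.0 else 0.0
            # Original over the fill, with the mask acting as the holdout.
            result[y][x] = premult[y][x] + fill * (1.0 - alpha[y][x])
    return result
```

With a radius of 1, this sketch fills a one-pixel hole, but the centre of a 3x3 hole stays empty until the radius is raised to 2. That is the mask-size dependence described above.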

Downsampling

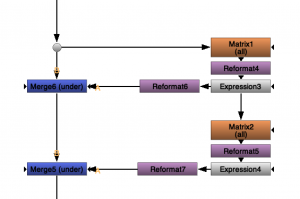

There is another method which is quite fast, simple and also independent of the mask radius. This method revolves around incrementally reducing the image size with an average filter, and for each incremental step, adding it back underneath the original by upsampling with a soft filter method.

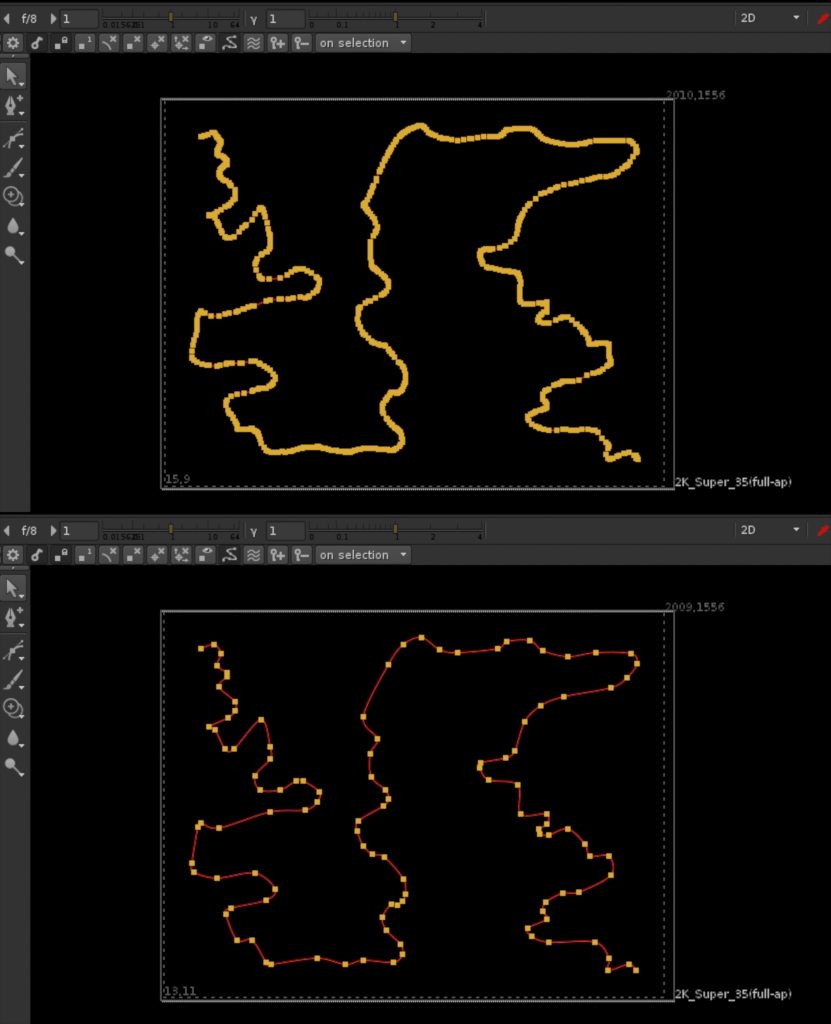

- Let's start off by taking the main image and making a hole in it with a mask.

- For each pixel, we add up the 2x2 neighbouring pixels.

- We now reduce the size of the image by half with an impulse filter, leaving out every second pixel.

- Then we unpremultiply all channels (including the alpha).

- We can now take a copy of the image, upres it to the original resolution, and add it underneath the main image.

- We now apply the same process over and over again until we reach an image that can no longer be halved in size.
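The steps above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the node setup from the example script: it works on a single-channel image stored as a list of lists, drops odd trailing rows and columns when halving, and upsamples with nearest-neighbour lookup instead of a soft filter to keep the code short. The names `halve` and `pyramid_fill` are hypothetical.

```python
def halve(color, alpha):
    """Sum each 2x2 block, keep one pixel per block, then unpremultiply.

    Assumption: color is premultiplied by alpha.
    Odd trailing rows and columns are dropped.
    """
    hs, ws = len(color) // 2, len(color[0]) // 2
    out_c = [[0.0] * ws for _ in range(hs)]
    out_a = [[0.0] * ws for _ in range(hs)]
    for y in range(hs):
        for x in range(ws):
            sum_c = sum(color[2 * y + dy][2 * x + dx] for dy in (0, 1) for dx in (0, 1))
            sum_a = sum(alpha[2 * y + dy][2 * x + dx] for dy in (0, 1) for dx in (0, 1))
            if sum_a > 0.0:
                out_c[y][x] = sum_c / sum_a  # unpremultiplied color
                out_a[y][x] = 1.0            # alpha divided by itself
    return out_c, out_a


def pyramid_fill(color, alpha):
    """Fill holes (alpha == 0) by stacking ever smaller copies underneath."""
    h, w = len(color), len(color[0])
    out_c = [[color[y][x] * alpha[y][x] for x in range(w)] for y in range(h)]
    out_a = [row[:] for row in alpha]
    cur_c = [row[:] for row in out_c]
    cur_a = [row[:] for row in out_a]
    scale = 1
    while len(cur_c) >= 2 and len(cur_c[0]) >= 2:
        cur_c, cur_a = halve(cur_c, cur_a)
        scale *= 2
        hs, ws = len(cur_c), len(cur_c[0])
        for y in range(h):
            for x in range(w):
                # Nearest-neighbour upres (a simplification of the soft filter).
                sy, sx = min(y // scale, hs - 1), min(x // scale, ws - 1)
                # Add the level underneath: only the still-empty part receives it.
                remaining = 1.0 - out_a[y][x]
                out_c[y][x] += cur_c[sy][sx] * remaining
                out_a[y][x] += cur_a[sy][sx] * remaining
    return out_c, out_a
```

Note that nothing in this sketch depends on the size of the hole: the loop simply keeps halving until a level has known pixels to offer. With nearest-neighbour upsampling the fill will look blocky, which is why a soft filter is the better choice in practice.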

I would have assumed that the best way to do this would be to do the averaging and reformatting in the same step, to avoid making calculations that we throw out anyway. But after trying BlinkScript, Expression nodes, Transforms and matrices, I found that using the Matrix node and throwing away the data gave the best performance.

Taking it further

One of the things you will notice right away is the logarithmic look of the sampling, caused by the logarithmic stepping in resolutions. We go quickly from a high-frequency image to a low-frequency image.

We can remedy this by running each level all the way from the base resolution. Doing this all the way down to the smallest resolution takes quite a bit more processing than the base version, so we would most likely be best off adding a parameter to choose how far down the sampling tree we want to go before we switch back to the normal version.
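Here is one possible reading of that idea as a plain Python sketch; it is an interpretation, not the setup from the example script. It assumes that "running each level from the base resolution" means averaging blocks of the full-resolution image directly, with block sizes growing one pixel at a time for the first few levels before switching to doubling. The parameter `linear_steps` and the names `block_sizes` and `fill_from_base` are hypothetical, and the image is again a single channel stored as a list of lists.

```python
def block_sizes(max_size, linear_steps):
    """Block sizes per level: linear growth first, then doubling.

    linear_steps = 0 gives the plain power-of-two stepping (2, 4, 8, ...).
    """
    sizes = []
    k = 2
    while k < 2 * max_size:
        sizes.append(k)
        k = k + 1 if len(sizes) < linear_steps else k * 2
    return sizes


def fill_from_base(color, alpha, linear_steps=0):
    """Fill holes using block averages taken straight from the base image."""
    h, w = len(color), len(color[0])
    base_c = [[color[y][x] * alpha[y][x] for x in range(w)] for y in range(h)]
    out_c = [row[:] for row in base_c]
    out_a = [row[:] for row in alpha]
    for k in block_sizes(max(h, w), linear_steps):
        averages = {}  # one unpremultiplied average per k x k block
        for y in range(h):
            for x in range(w):
                remaining = 1.0 - out_a[y][x]
                if remaining <= 0.0:
                    continue  # pixel is already solid
                block = (y // k, x // k)
                if block not in averages:
                    ys = range(block[0] * k, min(block[0] * k + k, h))
                    xs = range(block[1] * k, min(block[1] * k + k, w))
                    sum_c = sum(base_c[yy][xx] for yy in ys for xx in xs)
                    sum_a = sum(alpha[yy][xx] for yy in ys for xx in xs)
                    averages[block] = (sum_c / sum_a, 1.0) if sum_a > 0.0 else (0.0, 0.0)
                fill_c, fill_a = averages[block]
                out_c[y][x] += fill_c * remaining
                out_a[y][x] += fill_a * remaining
    return out_c, out_a
```

In this sketch, `block_sizes(8, 0)` steps through blocks of 2, 4 and 8 pixels, while `block_sizes(8, 3)` inserts a 3-pixel level before continuing with 4 and 8. Each extra linear level costs another pass over the base image, which is the trade-off the parameter is meant to control.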

Want to try it out yourself? Download an example implementation here: http://www.hagbarth.net/nuke/FFfiller_v01.nk